Data culture and organisational practice

Kostas Arvanitis, University of Manchester, UK, Abigail Gilmore, University of Manchester, UK, Franzi Florack, University of Bradford, UK, Chiara Zuanni, University of Graz, Austria

Abstract

Based on empirical research conducted as part of the Culture Metrics project (http://www.culturemetricsresearch.com/) and drawing on a review of relevant literature and related practices and initiatives across the sector, this paper provides an examination of processes, mechanisms, and challenges for establishing and using data sets in capturing and evaluating cultural performance and experiences. It raises questions about how cultural organisations collect and use data to analyse their practices, discusses frameworks for such data collection and analysis, and examines issues arising from data sharing and benchmarking. Based on this analysis and the experience of the Culture Metrics project, the paper reflects on the emergence of a data culture in organisations for performance measurement, self-evaluation, and data-driven decision making.

Keywords: culture metrics, data culture, data-driven decision making, performance measurement, self-evaluation

1. Introduction

Despite advances in the measurement and representation of cultural performance, value, and experience, until recently there has been little progress towards standardised approaches to metrics or towards the full involvement of the cultural sector in developing those metrics. Cultural organisations (and funders) are also increasingly looking for methods of data collection, analysis, and reporting that can produce bigger data sets at low cost and with little effort. At the same time, however, there is a perceived difficulty in gathering and harnessing data, owing to a gap in relevant expertise across the sector and a largely limited understanding of the value, relevance, and usefulness of the data.

Responding to these issues, the Culture Metrics project aimed to test the value of a co-produced and standardised metric set and system of opinion-based data collection, which were devised by a consortium of twenty museums, art galleries, and other arts and cultural organisations in England working with the Australian developer Culture Counts. Culture Metrics as a funded project came to an end in summer 2015. During its lifetime, more than seventy test events took place, the analysis of which is still ongoing. We are only now starting to make better sense of the issues, challenges, and benefits this work holds for both the participating organisations and the sector in general.

Drawing on the research to date (including interviews with cultural partners), this paper examines the processes, challenges, and limitations of establishing and using the Culture Metrics approach in capturing and evaluating cultural experiences. It reflects on the partners’ assessment of the potential of the metrics and the technology and presents some of the key issues that have arisen with regard to the value and usefulness of the data, data sharing, and benchmarking. Finally, the paper raises the question of whether and to what extent we are witnessing the emergence of a new data culture in cultural organisations for performance measurement, self-evaluation, and data-driven decision making.

2. Culture Metrics project: Overview

Culture Metrics was a collaboration between twenty arts and cultural organisations led by the cross-art form venue HOME (previously Cornerhouse), a technology company (Culture Counts), and a research partner (Institute for Cultural Practices, University of Manchester) that ran from September 1, 2014, to July 31, 2015. It was funded by the Digital R&D Fund for the Arts (Big Data Strand), in turn a collaboration between the Arts and Humanities Research Council (AHRC), Arts Council England (ACE), and National Endowment for Science, Technology and the Arts (NESTA). The project aimed to test the value of a co-produced, standardised, and aggregable metric set and system of opinion-based data collection designed to measure key dimensions of the quality of cultural performance and experience.

Culture Metrics built on a pilot project based in Manchester (UK), funded by ACE between July 2013 and July 2014, which was in turn inspired by a similar project initiated by the Department of Culture and Arts in Western Australia in 2010. The pilot aimed to debate and agree on a metrics system that combines self, peer, and public assessment to measure artistic quality and public engagement. This was developed by a consortium of thirteen cultural organisations from the Greater Manchester area, including museums, art galleries, theatre, orchestral music, jazz, cinema, and cross-art form organisations.

Culture Metrics picked up where the pilot left off. A larger consortium of cultural organisations and Culture Counts teamed up with the University of Manchester to refine the metrics (see tables 1 and 2); to develop an online platform that facilitates the use of the metrics and offers an automated way of aggregating, reporting, and visualising data; and to run a number of events on identified dimensions of “quality” to test both the metrics and the technology involved.

| Dimension | Metric statement |
| --- | --- |
| Presentation | “It was well produced and presented” |
| Distinctiveness | “It was different from things I’ve experienced before” |
| Rigour | “It was well thought through and put together” |
| Relevance | “It has something to say about the world in which we live” |
| Challenge | “It was thought provoking” |
| Captivation | “It was absorbing and held my attention” |
| Meaning | “It meant something to me personally” |
| Enthusiasm | “I would come to something like this again” |
| Local impact | “It is important that it’s happening here” |

Table 1: core quality metrics: self-, peer, and public assessment

| Dimension | Metric statement |
| --- | --- |
| Concept | “It was an interesting idea” |
| Risk | “The artists/curators really challenged themselves with this work” |
| Originality | “It was ground-breaking” |
| Excellence (national) | “It is amongst the best of its type in the UK” |
| Excellence (global) | “It is amongst the best of its type in the world” |

Table 2: core quality metrics: self- and peer assessment only

Culture Metrics is based on three main ideas. Firstly, the consistent and ongoing combination of feedback from three different types of respondent (self-assessors, artistic peers, and the public) brings different contexts and perspectives to bear on what is evaluated. The project called this a “triangulation” of data, though it is questionable whether this is indeed triangulation and whether this way of conceptualising the value and use of data from different sources is useful, as discussed below. Secondly, the use of standardised metrics across organisations potentially allows for comparisons among organisations, among different kinds of events, and over time. Thirdly, the active involvement of cultural organisations in shaping the metrics was seen as fundamental to developing a credible and representative measurement framework that would be useful not just to the twenty organisations involved but to the UK cultural sector as a whole. As one of the cultural partners of the project noted,

I think what’s nice to us about this is that it’s come from the arts organisations upwards. It’s information that we feel genuinely shouts about all the good things that we do, rather than us just responding to a set of governmental data queries that don’t actually convey the quality, extent and reach of the art that we produce. (interview)

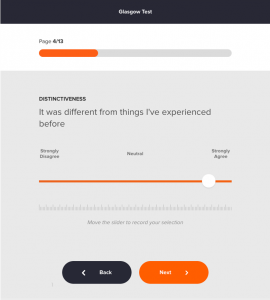

The project developed two main categories of metrics. One focused on “quality”: of product, experience, depth, and engagement, and of creative process. The other looked at organisational health and included financial metrics, quality and cultural leadership, and the quality of relationships and partnerships. The project’s premise was that integrating quality metrics with audience size and profile and with financial data allows for a more comprehensive assessment of how organisations balance creative, commercial, and audience objectives. The metrics are administered as a survey (on paper or online), in which participants indicate how much they agree or disagree with each metric statement on a sliding scale (see figure 1). Self-assessors and peers also take the survey both before and after the event/activity, to assess how well the resulting product matches their expectations. Additionally, the survey asks participants for three words they would associate with the cultural event/activity they attended and provides a free comment box for self-assessors and peers. Brief demographic information is also collected.
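The before-and-after design for self-assessors and peers lends itself to a simple comparison. The sketch below is purely illustrative and rests on assumptions not stated in the paper: slider positions are taken to be recorded on a 0 to 1 scale, and while the dimension names come from Table 1, the scores themselves are invented. It subtracts each pre-event expectation from the corresponding post-event score, so that a positive value indicates the event exceeded expectations on that dimension; the function name `expectation_gap` is hypothetical.

```python
# Hypothetical slider positions on an assumed 0-1 scale (0 = strongly disagree,
# 1 = strongly agree). Dimension names follow Table 1; the scores are invented.
pre_event = {
    "self": {"Presentation": 0.90, "Distinctiveness": 0.80, "Challenge": 0.70},
    "peer": {"Presentation": 0.80, "Distinctiveness": 0.60, "Challenge": 0.60},
}
post_event = {
    "self": {"Presentation": 0.85, "Distinctiveness": 0.85, "Challenge": 0.60},
    "peer": {"Presentation": 0.90, "Distinctiveness": 0.50, "Challenge": 0.70},
}


def expectation_gap(pre, post):
    """Return post-event score minus pre-event expectation for each dimension
    answered on both occasions. Positive values mean expectations were exceeded."""
    return {dim: round(post[dim] - pre[dim], 2) for dim in pre if dim in post}


for group in ("self", "peer"):
    print(group, expectation_gap(pre_event[group], post_event[group]))
```

Dimensions answered on only one occasion are skipped rather than treated as zero, since a missing answer says nothing about whether expectations were met.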

By gathering real-time data on the direct responses of artists, peers, and the public and combining it with traditional data on attendances, funding, box office, etc. and secondary demographics of audiences and communities of interest, Culture Metrics aimed to experiment with and, if possible, deliver comprehensive value analysis and reporting on a continuous basis. At the same time, one of the motivations behind the use of standardised metrics and the potential (though not yet agreed) sharing of data is the interest in finding a scalable, cost-effective way of sharing processes for data generation and collection that would allow cultural organisations to benchmark their work with their peers.

A key aspect of the project was the development of a technology that would support an almost seamless everyday use of the metrics and the data generated. The digital platform aims to offer a simple format for self-completion questionnaire surveys, downloadable onto mobile devices and feeding into a reporting dashboard that combines data from its various survey respondents. Data includes response rate, dimension averages, demographics, and peer, public, and self-scores (see figure 2).
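To make the dashboard’s headline figures concrete, the following minimal sketch groups responses by respondent type (self, peer, public) and computes a response count and an average score for each dimension. It is not the Culture Counts implementation: the record layout, the 0 to 1 score scale, the sample scores, and the helper names (`dimension_averages`, `response_rate`) are hypothetical, and the response rate is assumed to mean completed surveys divided by invitations issued.

```python
from collections import defaultdict
from statistics import mean

# Each response is (respondent_group, {dimension: score}). Scores are assumed to
# be on a 0-1 slider scale; these records are hypothetical, not project data.
responses = [
    ("public", {"Captivation": 0.8, "Meaning": 0.6}),
    ("public", {"Captivation": 0.6, "Meaning": 0.8}),
    ("peer", {"Captivation": 0.7, "Meaning": 0.5}),
    ("self", {"Captivation": 0.9, "Meaning": 0.7}),
]


def dimension_averages(records):
    """Return ({group: {dimension: mean score}}, {group: response count})."""
    scores = defaultdict(lambda: defaultdict(list))
    counts = defaultdict(int)
    for group, answers in records:
        counts[group] += 1
        for dim, score in answers.items():
            scores[group][dim].append(score)
    averages = {
        group: {dim: round(mean(vals), 2) for dim, vals in dims.items()}
        for group, dims in scores.items()
    }
    return averages, dict(counts)


def response_rate(completed, invited):
    """Share of invited respondents who completed the survey (assumed definition)."""
    return completed / invited if invited else 0.0


averages, counts = dimension_averages(responses)
print(averages)
print(counts)
print(response_rate(counts["public"], invited=10))
```

Keeping the three groups separate, rather than pooling them into a single average, is what allows self, peer, and public scores to be set side by side in the way the project describes.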

The survey summary page includes a live graph of the current dimension scores and a response count. Importantly, the platform gathers data and results in real time and aims to function as an online service interface that offers an automated data reporting mechanism. Although during the test phase the technology partner helped the cultural partners to set up the surveys and to collate and report on the data, in a largely “full-serve” mode, the aim is that in the long term cultural organisations will operate the platform in a “self-serve” mode.

The co-production and combination of data from different stakeholder groups and the potential of the large-scale data to support data-driven decisions within cultural organisations were among the premises and promises of the project that the research partner focussed on. Accordingly, the research strategy of the project (which included literature reviews, interviews with cultural partners, workshops, and a critical friends discussion group) aimed to provide an in-depth and critical examination of the broader contexts and practices of peer review, co-production, and evaluation of cultural performance management. It also aimed to look at processes and challenges of using (big) data sets in capturing and understanding cultural experiences. Ultimately, the research aimed at examining the promise, practices, and limitations of data-driven decision making with reference to the particular approach and technologies used in the Culture Metrics project.

3. Cultural organisations and (big) data

Historically, the cultural sector has engaged with data on cultural performance and audience experience in three main ways. Firstly, organisations are concerned with the production of their own data through quantitative and qualitative methods, including surveys, questionnaires, interviews, observations, online traffic, etc. In recent years, they have been urged to “exploit the opportunities technology offers to collect, analyse and apply data cost-effectively, to learn more about existing and potential audiences and to target marketing and fundraising effectively” (ACE, 2013, 32). However, the approach to how this data is collected and analysed, how analysis permeates organisational hierarchy and structure, and whether it has any impact on how the organisation reflects on its own practice appears inconsistent and unsystematic. The Digital Culture report (ACE, AHRC, & NESTA, 2013) found that data is being used by cultural organisations in England to understand audiences, develop strategies, improve online offerings, personalise and tailor marketing, and track discussion about organisations online. However, there is great variation across organisations in the frequency, consistency, and intensity of such data use: fewer than half of organisations said that they use data to identify and engage with audiences; 41 percent of organisations felt that they are not well served in areas such as data analysis; and 34 percent of organisations with physical venues have a shortage of data analysis skills. Only 10 percent of the survey respondents (the “cultural digerati”) are more proficient in data management and significantly more likely to use data in the development of new services, including data-driven marketing.

The second area is the use and analysis of existing evidence, which has been a common strategy of funders and policy makers (see, e.g., Mowlah et al., 2014). Indeed, using existing data can be a cheaper and more accessible option, especially for smaller organisations (Davies, 2012). In 2011, the Department for Culture, Media and Sport in the UK funded a study that investigated the feasibility of using existing data sets to evaluate the effects of cultural and sporting investment. It concluded that “the availability of relevant data and the nature of that data is the key determining factor in whether an approach could be adopted in the UK” (CASE programme, 2011, 2) and that more data should be made publicly available.

Thirdly, organisations have developed their own analytics strategies (e.g., Villaespesa & Tasich, 2012) that have enabled them to reflect particularly on online audiences’ behaviours and preferences and their use of cultural content. It is worth remembering, though, that “analytics is not a consultation like a doctor consultation, or it’s not like a pill that you take and then everybody is back on the same even field. It’s something you have to develop inside your organization, and some organizations are just going to have a better culture than anybody else” (Boucher Ferguson, 2013, 4).

In recent years, there has been increased investment in big data. By the end of 2012, 91 percent of the biggest American companies had a big data initiative planned or in progress (NewVantage Partners, 2013). This is linked to the claim that data-driven decision making in the commercial sector can lead to 6 percent higher productivity gains (McAfee & Brynjolfsson, 2012). The increasing emphasis on the potential of big data has also prompted new ways of collecting, storing, and analysing information in the sciences (Cushing, 2013).

This, though, has not necessarily been the case in the cultural sector, partly because most cultural organisations do not have enough data to call it big and often lack the relevant expertise to analyse it (Mateos-Garcia, 2014). Also, gathering and understanding digital cultural data has suffered from limited investment, to such an extent that Lilley and Moore state that “the current approach to the use of data in the cultural sector is out-of-date and inadequate” (2013, 3). Or as Suzy Glass puts it, “data is the next big thing in the arts. The problem is that a lot of us don’t really know what to do with it. So we don’t really do anything with it. Or even bother collecting it in the first place. It’s a bit of a hassle to gather the stuff after all. And it’s definitely a bit of a hassle to analyse it” (Digital R&D for the Arts, 2015, 11). Although new methods are being developed to deal with bigger data, such as in understanding the meanings of Facebook “likes” (Evans, 2011; Hanna et al., 2011), there is little evidence that these are being considered, let alone adopted, by the sector.

Culture Counts, the technology partner of the Culture Metrics project, has suggested a threefold classification of “data readiness,” drawing on their experience in working with over 150 cultural organisations in the United Kingdom and Australia:

- “Data shy”: 80 percent of all cultural organisations. They spend very little, or in some cases nothing, on formal evaluation of key aspects of quality.

- “Data ready”: 15 percent of all cultural organisations. They spend modest but non-marginal amounts of money on evaluation activity (estimated at between £3,000 and £10,000 annually), largely to meet funder expectations around grant reporting.

- “Data driven”: 5 percent of all cultural organisations. Some of these organisations are already spending significant amounts of money on evaluation activity (between £15,000 and £45,000 annually). Culture Counts suggests this group are already data-driven in their creative and management practices (Knell et al., 2015).

Although this is not based on statistically robust analysis and is not representative of the UK cultural sector as a whole, it gives an indication of what the “data culture” looks like at the moment.

Mateos-Garcia (2014) recommends four ways in which cultural organisations can increase their interaction with (big) data: broadening and deepening relationships with audiences, creating and measuring value, developing new business models, and encouraging creative experimentation. Indeed, cultural organisations have been experimenting with different ways of creating and analysing data to offer different and enhanced opportunities for audience engagement and enterprise: NoFit State Circus, National Theatre Wales, and Joylab explored the concept of “value exchange” as an approach for collecting data, aiming to address the question “Would audiences exchange their personal information for creative and engaging content that added value to their experience?” (Cunliffe et al., 2014). Also, the Newcastle and Gateshead Cultural Venues network developed a “data commonwealth” in which audience data was used and shared to introduce audiences to the diverse range of activities in their area (McIntyre et al., 2015). Furthermore, responding to the question “why bother in the first place” about open data, Rohan Gunatillake gives the example of the Festivals Listings open data initiative in the performing arts, which offers up-to-date listings from the twelve major Edinburgh Festivals across the year and points out: “if third party developers and organisations can build on your data they can create new value as a result, such as selling more tickets to your events by using your data in a third party listing app that has much better search functionality than your website does” (Digital R&D for the Arts, 2015, 11).

These examples reinforce Lilley’s (2015) point that “whether the data is technically defined as ‘big’ is of comparatively little importance in some ways. It is the use of data-driven approaches to drive insight and change behaviour which matters.” It is telling that what makes big data projects and initiatives both interesting and thought provoking is not always or necessarily the use of “big data,” but the imaginative and often unconventional definition and use of data and data sources (Feldman et al., 2015). Also, there seems to be an agreement that data can be valuable, but there is little understanding of how to measure this value (Bakhshi, 2013); as Holden puts it, “if the methodologies of measurement are inadequate, the results flowing from them are bound to be unconvincing” (2005, 17).

4. The Culture Metrics experience: From “big data” to just “data”

Lilley’s point above about the (currently) comparatively low importance of the size of the data was further confirmed in the Culture Metrics project. There is a fear that the prevailing rhetoric around big data might prompt institutions to spend more time and effort trying to compile data sets large enough to count as “big data,” rather than developing robust processes and methodologies to use and interpret the data, and data sources, they already have access to. Although big data do promise (and often deliver) opportunities to identify patterns and trends that would not otherwise be noticeable, there is a concern about almost fetishising such patterns and trends. As Feldman, Kenney, and Lissoni put it in the context of scholarly research, the fear is that:

… scholars will focus on pattern recognition rather than developing theory or engaging in hypothesis driven empirical research. As it becomes easier to manipulate large numbers of records it is seductive to keep collecting more and more observations, matching ever more and more diverse sources – the potential is unlimited. Resources may be diverted to never-ending data projects rather than focusing on questions that are answerable with currently available data (2015, 1630).

In the case of the Culture Metrics project, the promise and potential of “big” data did not seem to be at the heart of the cultural institutions’ motivations and aims, either at the beginning of the process or after the first few applications of the metrics in the evaluation of test events. What was much more important and exciting for the partners was the opportunity to combine, compare, and contrast data and data sources, what the project called “data triangulation.” Although cultural organisations, the Culture Metrics partners included, seek data from diverse audience sources, this happens largely in an unsystematic way (Mowlah et al., 2014). By contrast, Culture Metrics’ promise of systematic data generation, aggregation, and reporting via a single digital platform was particularly attractive.

Also, although it might not be “big data,” it is probably safe to say that it constitutes “new data.” The triangulation of different sets of data (from self, peers, and public) signified a new kind of data altogether: a new and different way to evaluate cultural performance and understand people’s cultural experiences. Of course, it is debatable to what extent this is indeed “data triangulation,” in the sense that triangulation often aims to offer a more accurate and comprehensive answer to a single question. In the case of the Culture Metrics project, by contrast, one can argue that the aim has been to gain a better understanding of the relationship between the context and the content of data on cultural performance and audience experience. In other words, the value of the Culture Metrics system is that it does not just compare evaluation data from self, peers, and public, but also allows cultural organisations to examine how the context of each data source compares against the intentions behind a cultural activity/event and against the results of the evaluation.

This means that one needs to be open about what constitutes data and how the analysis of this data might offer not only additional answers to questions around quality of cultural offer and people’s experiences, but also a different way of conceptualising and articulating this quality. The Culture Metrics project attempted that by defining not just the sources of data, but also the metrics themselves that produce the data, as explained above. That said, it is fair to say that while the project is based on a set of standardised metrics, and although these metrics have been developed by cultural organisations themselves, the “standardization” of the metrics is still ongoing (ACE, 2015). This standardisation does not involve just the decision on the “core metrics” (which has been agreed; see tables 1 and 2), but also the development of additional modular metrics that can be used in different types of organisations and contexts. In the various test events, the project partners experimented with different and bespoke metrics that would need to be examined further to assess their relevance and usefulness. This might lead to a library of metrics and survey questions, where organisations can see how many times the metric has been used, whether it’s recommended, and what the best questions to ask are about quality in different contexts. There is a concern though that the addition of a number of different modular metrics might undermine the premise of standardisation that Culture Metrics has been aiming for, and indeed make the data less “big.” The modular metrics can and will serve individual organisations and their needs, but would probably hinder efforts to benchmark quality and performance across the board.

Benchmarking itself raised a number of issues in the project. Our research has shown that comparisons across organisations rarely happen, and when they do, they tend to be informal. They also tend to happen in cluster groups (such as museums or festivals) rather than across different types of organisations. Apart from the metrics produced in this project, organisations do not use any other shared metrics with peers, even when co-produced initiatives take place. Such benchmarking would most probably involve organisations sharing data with each other (and beyond); in other words, data resulting from the use of the metrics would need to be “open data” for this benchmarking to be possible. Although the project’s cultural organisations supported the “democratisation” of data in principle, they were concerned about the ways this data might be used (or abused) by others. The concern is that the sharing of raw data might make the narrative and culture of their organisation less visible. Instead, partners have proposed that such benchmarking could be done via the sharing of analysis rather than the raw data itself, to allow organisations to continue to shape and “tell their own quality stories.”

The above approach to benchmarking favours the use and value of data for internal evaluation. As one of the cultural partners noted, this is “benchmarking against ourselves, that’s why long term data will be interesting.” This suggests that an emerging data culture in organisational practice might be (at least in the first instance) less about the production and use of data and more about self-improvement. As Suzy Glass stresses, “the importance of data isn’t the data by itself. It’s the possibility of self-awareness and self-reflection that it brings to bear to make us better” (Digital R&D for the Arts, 2015, 5). Similarly, one of the cultural partners clarifies:

I don’t think it told us anything we wouldn’t have known but for me the value of this has always been in helping organisations and managements to make better decisions. In other words, in an artistic context you’re always seeking to do something for a certain reason. It may not be for the box office, it may be for an artistic breakthrough, some sort of artistic brand, maybe to bring new things in, but those objectives aren’t the same so in a way, the ways Culture Metrics work is whether what you seek to do and how you seek to do it measured against the public and peer expectation of what you’re seeking to do and why you’re seeking to do it. If they tally then one could argue your plan basis is good. (interview)

This also raises the question of the extent to which cultural organisations might use the metrics in the activity planning process as a reflective mechanism for articulating the intentions behind their cultural activity and what they might expect its evaluation to show. This is an interesting proposition, as it suggests that organisations are trying not only to understand their audiences and their preferences, but also to “predict” their reactions to individual metrics. As another cultural partner noted:

To me the most useful thing will be to move to a situation where we kind of predict across the course of the year what we’re trying to do with each particular exhibition or programme. (interview)

Similarly, a different partner, referring to one of their test events, gives an example of this process:

… it was an evening of opera excerpts. We weren’t seeking to be groundbreaking, we weren’t seeking to be innovative, so therefore we didn’t score particularly high on that, but that wasn’t troubling […] so, if we were doing something which was basically a standard concert and we had one of the metrics as innovation, it would still be there, we’d ask the public whether we were innovative or not, but we would score ourselves low with the expectation that they would. (interview)
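The partner’s reasoning, scoring oneself low on a dimension in the expectation that the public will do the same, can be expressed as a simple check on whether self-assessed expectations “tally” with public responses. The sketch below is a hypothetical illustration only: the scores are invented, the 0 to 1 scale is assumed, and the 0.15 tolerance for deciding whether two scores tally is an arbitrary choice rather than anything used in the project. The function name `tally` is likewise hypothetical.

```python
# Hypothetical pre-event self-assessment and public post-event averages on an
# assumed 0-1 scale. Dimensions come from Table 1; the numbers are invented.
self_expectation = {"Distinctiveness": 0.2, "Presentation": 0.9, "Enthusiasm": 0.8}
public_average = {"Distinctiveness": 0.3, "Presentation": 0.85, "Enthusiasm": 0.55}

# Arbitrary, assumed threshold for treating two scores as matching.
TOLERANCE = 0.15


def tally(expected, observed, tolerance=TOLERANCE):
    """Compare expected and observed scores for dimensions present in both.

    Returns {dimension: (label, gap)} where gap = observed - expected and the
    label is "tallies" when the absolute gap is within the tolerance.
    """
    result = {}
    for dim, exp_score in expected.items():
        if dim not in observed:
            continue  # e.g. Table 2 metrics are not put to the public
        gap = observed[dim] - exp_score
        label = "tallies" if abs(gap) <= tolerance else "diverges"
        result[dim] = (label, round(gap, 2))
    return result


for dim, (label, gap) in tally(self_expectation, public_average).items():
    print(f"{dim}: {label} (gap {gap:+.2f})")
```

On this reading, a low public score on a dimension the team deliberately did not pursue is not in itself a problem; the informative cases are the dimensions flagged as diverging, here Enthusiasm.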

It could be argued that being able to anticipate how audience members and peers might respond to a work is a reflection of the quality and maturity of creative and cultural leadership in an organisation. Also, this reflection and rationalisation of the metrics against possible audience reactions signifies an effort to “humanise,” almost personalise the whole process of data generation and analysis. The organisation aims to “embody” the audience and, perhaps, vice versa.

Furthermore, the cultural partners, who had previously followed an informal and largely inconsistent peer review process, welcomed a more formal structure of peer evaluation to avoid the issues of impartiality that any personal relationship with peers might raise. Equally, some self-assessment was already part of the organisations’ structure, but was largely neglected due to other priorities. Accordingly, they are hoping to develop longitudinal data, which will allow them to assess the quality of their work over the course of each year and across a variety of events. The aim is to integrate this evaluation data from self, peer, and public with other existing data and value frameworks (such as Audience Finder, box office data, etc.), although most organisations are not ready to do so. Combining this data with social media data is also being considered, although social media data are not used to measure quality or performance in a consistent or structured way. Although social media provide the opportunity for more dialogue with audiences, the cultural partners in Culture Metrics had different levels of understanding of how and whether social media provide data that can contribute to “objective” measurement.

5. Towards a data culture in cultural organisations?

The above analysis raises the question: are we witnessing the emergence of a data culture in cultural organisations for performance measurement, self-evaluation, and data-driven decision making? Are we facing a shift in the cultural sector in which the collection and analysis of data become standardised, even professionalised, so as not only to inform but also to drive decisions in both cultural programming and audience engagement and development? As the Culture Metrics technology partner put it:

… the million dollar question about all of this is understanding actually what having access to this kind of data and knowledge enables you to do as an organisation, how you understand and make sense of it and how you respond to it in different ways, so also building up some kind of shared knowledge and discussion around that. (interview)

Referring to the same challenge, Tandi Williams notes that “meaningful use of data begins with a culture of curiosity […] Not everyone will become an analyst overnight (nor should they), but everyone should understand the potential and be open to discovering new insights” (Digital R&D for the Arts, 2015, 12). Similarly, Sophie Walpole calls for organisations to be not necessarily data-driven but “data-savvy, as the rewards can be substantial once you know where to find the right data and how to interpret it” (Digital R&D for the Arts, 2015, 13). Cimeon Ellerton (2015) seems to go beyond the “data-savvy” cultural organisation and does not shy away from the promise of data-driven decision-making. He believes “the future is really here” and current new developments in data tools and approaches can “allow organisations to make that step change from informed and experience-led guess work to data-driven decision-making that actually makes a real deliverable difference. This is not data analysis for the sake of it, but tools that finally make a difference at a practical level.”

According to Culture Counts’ taxonomy, the Culture Metrics partners subscribe to the data-savvy or data-informed culture of curiosity, but would not (yet) go so far as to claim that data-driven decisions in the cultural sector are a reality. This is not necessarily because data-driven decision making is impossible, but because, at least in the Culture Metrics project’s experience so far, cultural organisations are still grappling with the generation and internal management of data. The lack of data analysis expertise; the existence of data-unfriendly organisational structures; how data translates into embedded knowledge; how this knowledge is shared within the organisation; and how, where, why, and by whom decisions are made: all of these remain barriers to understanding the value, usefulness, and limitations of data-driven decision making, let alone its practical applicability.

That said, what the Culture Metrics experience has shown is that cultural organisations have a “thirst” for new types and sources of data that might allow them to reflect on their own practice and understand its impact in a more holistic way. As one of the partners mentioned, “we’re going to look at those three sets of data, it’s the first time we’ll be able to compare different sources of data and a more detailed self-analysis by the actual team that undertook the exhibition to see what it can tell us and if it provides stronger or different forms of data for us” (interview). It seems that this systematic and intelligent use of data is, at the moment, more important than developing a full-blown data-driven decision-making approach. As another cultural partner put it:

It’s as if data has always existed in some separate realm which has really been used for accountability purposes. I think we often collected the data because somebody requires us to report on it, rather than collecting the data because it’s actually useful to us and can help us improve our performance and it’s a really obvious thing to say, it seems obvious you should use data for that but I still think most organisations don’t. They do data because they feel obliged to collect it and don’t actually use it in an intelligent way. So I’m very hopeful we’ll be able to finally move into intelligent use of data. (interview)

Also, the discussions in the Culture Metrics workshops raised the issue of the seductive power of algorithms and “machine learning,” which can intrigue users and prompt reflection on their part. There is a danger that a partial understanding of how the data is produced and how it can be read could lead to misconceptions about its validity and value. The more decision making is informed or, indeed, driven by data, the more transparent and better understood the technologies, methodologies, and processes of capturing and analysing this data need to be. Otherwise, “with a sufficiently large sample it is simply easier to find associations and make dubious claims” (Feldman et al., 2015, 1630). Longitudinal data tracking and the use of more data points (e.g., in terms of both the range of events and the audiences evaluated) could therefore support a safer and more insightful analysis and comparison of data. As one of the cultural partners noted, “we have to look at how we use the data long-term and how we deal with the high scores that we get, what we do about differentiation; so we need to get more data long-term and that standard deviation from a mean in order to get particularly meaningful data” (interview).
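The partner’s point about high scores and “standard deviation from a mean” can be illustrated with a minimal Python sketch. It assumes invented mean audience scores on a 0 to 1 scale for a series of past events (these are not Culture Metrics data) and a hypothetical helper, z_score, that expresses a new event’s score relative to the spread of earlier ones. When most events score highly, raw averages differ only slightly, whereas standardising against a longitudinal baseline shows how unusual a given result is.

```python
from statistics import mean, stdev

# Hypothetical mean audience scores (0 to 1 scale) for past events.
# These values are invented for illustration and are not Culture Metrics data.
baseline_scores = [0.82, 0.85, 0.79, 0.88, 0.84, 0.81, 0.86, 0.83]


def z_score(value: float, history: list[float]) -> float:
    """Express a score as standard deviations above or below the historical mean."""
    if len(history) < 2:
        raise ValueError("need at least two past events to estimate spread")
    sd = stdev(history)  # sample standard deviation of the longitudinal baseline
    if sd == 0:
        raise ValueError("historical scores have no spread")
    return (value - mean(history)) / sd


# Two hypothetical new events: both raw scores look "high".
new_events = {"Exhibition A": 0.90, "Exhibition B": 0.83}
for name, score in new_events.items():
    print(f"{name}: raw {score:.2f}, z = {z_score(score, baseline_scores):+.2f}")
```

With these invented numbers, Exhibition A sits more than two standard deviations above the baseline, while Exhibition B falls just below the mean, even though both raw scores look high. With only a handful of past events the standard deviation is itself a rough estimate, which is one reason longer-term tracking matters.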

The above suggests that before data-driven decision making can become an option that creates real change, the generation and analysis of data need to become part of an organisation’s culture. The Culture Metrics experience has shown that the discussion about cultural performance and quality measurement was less about audit and reporting and more about cultural and creative practice. Partners were interested in understanding what the generation and use of this audience data could do for their daily practice, rather than looking for the “easy wins” (if any) of collating evaluation data for their annual reports. The extent to which discussion about data becomes discussion about cultural practice may indicate whether a data culture is forming in a cultural organisation.

6. Conclusion

Culture Metrics aimed to enable the creation of high-volume and high-velocity data on the quality of cultural experiences through a standardised metrics system that would have credibility and relevance to the public, peers, funders, the cultural sector as a whole, and the policy and academic communities. In the process, it aimed to raise the question of whether this approach might lead to an organisational practice around the measurement of cultural performance and experience that is based more systematically and consistently on the generation, aggregation, analysis, and comparison of relevant data. Ultimately, the metrics and the platform aim to create more opportunities for audience and stakeholder feedback and allow the sector to articulate the wider public value of the arts to itself, the public, and other stakeholders, in ways supported by critics of more “top-down” quantitative performance measurement (Walmsley, 2012). As the recent report of the AHRC-led Cultural Value Project (Crossick & Kaszynska, 2016) concludes, “thinking about cultural value needs to give far more attention to the way people experience their engagement with arts and culture, to be grounded in what it means to produce or consume them or, increasingly as digital technologies advance as part of people’s lives, to do both at the same time” (2016, 7), and “the challenge will be to translate the learning into settings where it might enable big data to inform wider agendas concerned with evaluation and value, and this probably requires a significant change for institutions that are used to the analogue world” (2016, 126).

Indeed, the need for a significant change in organisational policy and practice, a paradigm shift, is among the findings of the Culture Metrics project. The fact that Culture Metrics has been sector-led and has involved directors and senior managers in its twenty cultural organisations offers hope that this change might be supported and followed through. There are, of course, a number of issues arising from the Culture Metrics project: for example, data ownership and sharing; whether, and to what extent, this is “open data”; the need to also consider the metadata of data collection (e.g., data about where the technology failed, which questions people skip, when they take the survey, and gaps in the data); the integration of this data with other related data (e.g., box office, demographics, audience segmentation, social media data); and, of course, how the gap in relevant data analysis skills and expertise can be closed. All these issues, and more, will need to be resolved if a data culture is to materialise and be of any real value and use.

Acknowledgements

Many thanks to NESTA, AHRC, and ACE for funding and supporting the Culture Metrics project, and to Culture Counts and the project’s cultural organisations for participating in the research.

References

ACE (Arts Council England). (2013). “Great art and culture for everyone.” October. Consulted March 20, 2016. Available http://www.artscouncil.org.uk/media/uploads/Great_art_and_culture_for_everyone.pdf

ACE. (2015). “Quality Metrics: capturing the quality and reach of arts and cultural activity.” Consulted March 20, 2016. Available http://www.artscouncil.org.uk/what-we-do/research-and-data/quality-work/quality-metrics/

ACE, AHRC (Arts and Humanities Research Council), & NESTA (National Endowment for Science, Technology and the Arts). (2013). Digital culture: how arts and cultural organisations in England use digital technology. Consulted March 20, 2016. Available http://artsdigitalrnd.org.uk/wp-content/uploads/2013/11/DigitalCulture_FullReport.pdf

Bakhshi, H. (2013). “Five principles for measuring the value of culture.” NESTA blogs. November 25. Consulted March 20, 2016. Available http://www.nesta.org.uk/blog/five-principles-measuring-value-culture-0

Boucher Ferguson, R. (2013). “The Big Deal About a Big Data Culture (and Innovation).” MIT Sloan Management Review 54(2): 1–5.

CASE (Culture and Sport Evidence) programme. (2011). The Art of the Possible – Using secondary data to detect social and economic impacts from investments in culture and sport: a feasibility study. July. Consulted March 20, 2016. Available http://www.gov.uk/government/publications/the-art-of-the-possible-using-secondary-data-to-detect-social-and-economic-impacts-from-investments-in-culture-and-sport-a-feasibility-study

Crossick, G., & P. Kaszynska. (2016). “Understanding the value of arts and culture. The AHRC Cultural Value Project.” Consulted March 20, 2016. Available http://www.ahrc.ac.uk/documents/publications/cultural-value-project-final-report/

Cunliffe, D., B. Gamble, J. Nicholls, M. Cawardine-Palmer, & F. Roche. (2014). “Torf. Exploring creative campaigns, using mobile and web technology, to capture audience data and establish ongoing engagement between the audience and the arts organisation.” Consulted March 20, 2016. Available http://artsdigitalrnd.org.uk/wp-content/uploads/2014/06/Torf-Eng.pdf

Cushing, J.B. (2013). “Beyond Big Data?” Computing in Science & Engineering 15(5): 4–5.

Davies, J. (2012). “The data trail.” April 4. Consulted March 20, 2016. Available http://dcmsblog.uk/2012/04/the-data-trail/

Digital R&D for the Arts. (2015). “Making Digital Work: Data.” Consulted March 20, 2016. Available http://artsdigitalrnd.org.uk/wp-content/uploads/2015/06/DigitalRDFundGuide_Data.pdf

Ellerton, C. (2015). “Data Worlds Explored.” Arts Professional. Consulted March 20, 2016. Available http://www.artsprofessional.co.uk/magazine/article/data-worlds-explored

Evans, R. (2011). “To like or not to like: is that the new question?” Journal of Arts Marketing (JAM) 48: 8–9.

Feldman, M., M. Kenney, & F. Lissoni. (2015). “The New Data Frontier.” Research Policy 44: 1629–1632.

Hanna, R., A. Rohm, & V.L. Crittenden. (2011). “We’re all connected: The power of the social media ecosystem.” Business Horizons 54(3): 265–273.

Holden, J. (2005). Capturing Cultural Value: How Culture Has Become a Tool of Government Policy. London: Demos.

Knell, J., C. Bunting, A. Gilmore, K. Arvanitis, F. Florack, & N. Merriman. (2015). HOME: Quality Metrics. Research & Development Report. London: NESTA. Consulted March 20, 2016. Available http://artsdigitalrnd.org.uk/wp-content/uploads/2014/06/HOME-final-project-report.pdf

Lilley, A. (2015). “What can Big Data do for the cultural sector? Audience Finder.” Consulted March 20, 2016. Available http://www.theaudienceagency.org/insight/using-the-evidence-to-reveal-opportunities-for-engagement

Lilley, A., & P. Moore. (2013). “Counting What Counts: What big data can do for the cultural sector.” February 7. Consulted November 14, 2014. Available http://www.nesta.org.uk/sites/default/files/counting_what_counts.pdf

Mateos-Garcia, J. (2014). “The art of analytics: using bigger data to create value in the arts and cultural sector.” NESTA blogs. Consulted March 20, 2016. Available http://www.nesta.org.uk/blog/art-analytics-using-bigger-data-create-value-arts-and-cultural-sector

McAfee, A., & E. Brynjolfsson. (2012). “Big data: the management revolution.” Harvard Business Review 90(10): 60–68.

McIntyre, A., J. Hay, & M. Dobson. (2015). “NGCV: The Unusual Suspects.” Consulted March 20, 2016. Available http://artsdigitalrnd.org.uk/wp-content/uploads/2014/06/NGCV-The-Unusual-Suspects-project-report-V2.pdf

Mowlah, A., V. Niblett, J. Blackburn, & M. Harris. (2014). “The value of arts and culture to people and society – an evidence review.” Consulted March 20, 2016. Available http://www.artscouncil.org.uk/media/uploads/pdf/The-value-of-arts-and-culture-to-people-and-society-An-evidence-review-TWO.pdf

NewVantage Partners. (2013). “Big Data Executive Survey 2013: The State of Big Data in the Large Corporate World.” Consulted March 20, 2016. Available http://newvantage.com/wp-content/uploads/2013/09/Big-Data-Survey-2013-Executive-Summary.pdf

Villaespesa, E., & T. Tasich. (2012). “Making Sense of Numbers: A Journey of Spreading the Analytics Culture at Tate.” In N. Proctor & R. Cherry (eds.). Museums and the Web 2012. Silver Spring, MD: Museums and the Web. Published April 7, 2012. Consulted March 20, 2016. Available http://www.museumsandtheweb.com/mw2012/papers/making_sense_of_numbers_a_journey_of_spreading.html

Walmsley, B. (2012). “Towards a Balanced Scorecard: A critical analysis of the Culture and Sport Evidence Programme.” Cultural Trends 21(4): 325–334.

Cite as:

Arvanitis, Kostas, Abigail Gilmore, Franzi Florack and Chiara Zuanni. "Data culture and organisational practice." MW2016: Museums and the Web 2016. Published March 20, 2016. Consulted .

https://mw2016.museumsandtheweb.com/paper/data-culture-and-organisational-practice/